HYPOTHESIS TESTING: Definition, Testing a Statistical Hypothesis, Regression Analysis and Common Abuses of Regression.

HYPOTHESIS TESTING

Population parameters (say, the mean or variance) of a characteristic of interest are rarely known with certainty in statistical studies and are therefore estimated from sample information. An estimate of a parameter can be a point estimate or an interval estimate (a confidence interval). However, many problems in engineering, science, and management require that we decide whether to accept or reject a statement about some parameter(s) of interest. The statement being challenged is known as the null hypothesis, and the decision-making procedure is called hypothesis testing. This is one of the most useful techniques for statistical inference. Many types of decision-making problems in engineering and science can be formulated as hypothesis-testing problems. For example, an engineer may be interested in comparing the mean of a population to a specified value. Such simple comparative experiments are frequently encountered in practice and provide a good foundation for the more complex experimental design problems that will be discussed subsequently. In the initial part of our discussion, we consider comparative experiments involving either one or two populations, and our focus is on testing hypotheses concerning the parameters of the population(s). We now give a formal definition of a statistical hypothesis.

Definition

A statistical hypothesis is a statement about parameter(s) of one or more populations.

For example, suppose that we are interested in the burning rate of a solid propellant used to power aircrew escape systems. The burning rate is a random variable that can be described by a probability distribution. Suppose that our interest focuses on the mean burning rate (a parameter of this distribution). Specifically, we are interested in deciding whether or not the mean burning rate is 60 cm/s. We may express this formally as

H0 : µ = 60 cm/s

H1 : µ ≠ 60 cm/s

The statement

H0 : µ = 60 cm/s

is called the null hypothesis, and the statement

H1 : µ ≠ 60 cm/s

is called the alternative hypothesis. Since the alternative hypothesis specifies values of µ that could be either greater or less than 60 cm/s, it is called a two-sided alternative hypothesis. In some situations, we may wish to formulate a one-sided alternative hypothesis, as in

H0 : µ = 60 cm/s

H1 : µ < 60 cm/s

or

H0 : µ = 60 cm/s

H1 : µ > 60 cm/s

It is important to remember that hypotheses are always statements about the population or distribution under study, not statements about the sample. An experimenter generally believes the alternative hypothesis to be true. Information on the entire population (finite or infinite) is usually impractical or impossible to collect, so hypothesis-testing procedures rely on the information in a random sample from the population of interest. If this information is consistent with the null hypothesis, we will accept the null hypothesis (strictly speaking, we fail to reject it); however, if this information is inconsistent with the null hypothesis, we will conclude that there is little evidence to support it.

The structure of hypothesis-testing problems is generally identical in all the engineering and science applications considered here. Rejection of the null hypothesis always leads to accepting the alternative hypothesis. In our treatment of hypothesis testing, the null hypothesis will always be stated so that it specifies an exact value of the parameter (as in the statement H0 : µ = 60 cm/s). The alternative hypothesis will allow the parameter to take on several values (as in the statement H1 : µ ≠ 60 cm/s). Testing the hypothesis involves taking a random sample, computing a test statistic from the sample data, and using the test statistic to make a decision about the null hypothesis.

Testing a Statistical Hypothesis

To illustrate the general concepts, consider the propellant burning rate problem introduced earlier. The null hypothesis is that the mean burning rate is 60 cm/s, and the alternative is that it is not equal to 60 cm/s. That is, we wish to test

H0 : µ = 60 cm/s

H1 : µ ≠ 60 cm/s

Suppose that a sample of n = 10 specimens is tested and that the sample mean burning rate x̄ is observed. The sample mean is an estimate of the true population mean µ. A value of the sample mean x̄ that falls close to the hypothesized value of µ = 60 cm/s is evidence that the true mean µ is really 60 cm/s; that is, such evidence supports the null hypothesis H0. On the other hand, a sample mean that is considerably different from 60 cm/s is evidence in support of the alternative hypothesis H1. Thus, the sample mean is the test statistic in this case.

Different samples may have different mean values. Suppose that if 58.5 ≤ x̄ ≤ 61.5, we will not reject the null hypothesis H0 : µ = 60, and if either x̄ < 58.5 or x̄ > 61.5, we will accept the alternative hypothesis H1 : µ ≠ 60. The values of x̄ that are less than 58.5 or greater than 61.5 constitute the rejection region (also called the critical region) for the test, while all values in the interval 58.5 to 61.5 form the acceptance region. The boundaries between the critical regions and the acceptance region are called the critical values. In our example the critical values are 58.5 and 61.5. Thus, we reject H0 in favor of H1 if the test statistic falls in the critical region and accept H0 otherwise. The acceptance region is defined based on the concept of a confidence interval and the level of significance for the test. More details on confidence intervals and the level of significance can be found on the web (http://en.wikipedia.org/wiki/Confidence_interval; http://www.youtube.com/watch?v=iX0bKAeLbDo) and in Montgomery and Runger (2010).

This decision procedure can lead to either of two wrong conclusions. For example, the true mean burning rate of the propellant could be equal to 60 cm/s. However, for the randomly selected propellant specimens that are tested, we could observe a value of the test statistic x̄ that falls into the critical region. We would then reject the null hypothesis H0 in favor of the alternative H1 when, in fact, H0 is really true. This type of wrong conclusion is called a Type I error. It is generally regarded as a more serious mistake than the Type II error explained below.

Now suppose that the true mean burning rate is different from 60 cm/s, yet the sample mean x̄ falls in the acceptance region. In this case we would accept H0 when it is false. This type of wrong conclusion is called a Type II error.

Thus, in testing any statistical hypothesis, four different situations determine whether the final decision is correct or in error. These situations are presented in Table 2-1.

Because our decision is based on random variables, probabilities can be associated with the Type I and Type II errors. The probability of making a Type I error is denoted by the Greek letter α. That is,

α = P(Type I error) = P(Reject H0 | H0 is true)

Sometimes the Type I error probability is called the significance level (α) or size of the test.
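As a rough illustration, the error probabilities for the acceptance region 58.5 to 61.5 can be computed once a distribution for the sample mean is assumed. The sketch below assumes that the burning rate is normally distributed with a known standard deviation; the value sigma = 2.5 cm/s and the alternative true mean of 62 cm/s are hypothetical choices made only for this example, and the function name is illustrative.

```python
from math import sqrt
from statistics import NormalDist


def error_probabilities(mu0, lower, upper, sigma, n, mu_true):
    """Return (alpha, beta) for the rule: reject H0 if x-bar is outside [lower, upper].

    Assumes the sample mean is normal with standard error sigma / sqrt(n).
    """
    se = sigma / sqrt(n)  # standard error of the sample mean

    # Type I error: x-bar falls outside the acceptance region although mu = mu0.
    under_h0 = NormalDist(mu0, se)
    alpha = under_h0.cdf(lower) + (1.0 - under_h0.cdf(upper))

    # Type II error: x-bar falls inside the acceptance region although mu = mu_true.
    under_h1 = NormalDist(mu_true, se)
    beta = under_h1.cdf(upper) - under_h1.cdf(lower)
    return alpha, beta


# sigma = 2.5 and mu_true = 62 are hypothetical values, not taken from the text.
alpha, beta = error_probabilities(
    mu0=60, lower=58.5, upper=61.5, sigma=2.5, n=10, mu_true=62
)
print(f"alpha = {alpha:.4f}, beta = {beta:.4f}")
```

Widening the acceptance region lowers the Type I error probability but raises the Type II error probability for a fixed sample size, which can be checked by changing `lower` and `upper`.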

The steps followed in hypothesis testing are

(i) Specify the null and alternative hypotheses.

(ii) Define the level of significance (α) based on the criticality of the experiment.

(iii) Decide the type of test to be used (left-tailed, right-tailed, or two-tailed).

(iv) Depending on the type of test, the sampling distribution, and the available information on the mean and variance, define the appropriate test statistic (z-test, t-test, etc.).

(v) Considering the level of significance (α), define the critical values by looking them up in standard statistical tables.

(vi) Decide on acceptance or rejection of the null hypothesis by comparing the value of the test statistic defined in step (iv), calculated from the sample, with the critical values from the statistical tables.

(vii) Derive a meaningful conclusion.
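The steps above can be sketched for the burning rate example as a two-sided z-test. This is a minimal sketch under stated assumptions: the population standard deviation is taken as known (sigma = 2.5 cm/s, a hypothetical value), the ten measurements are invented for illustration, and the critical value is computed from the standard normal distribution rather than read from a table.

```python
from math import sqrt
from statistics import NormalDist, mean


def two_sided_z_test(sample, mu0, sigma, alpha=0.05):
    """Test H0: mu = mu0 against H1: mu != mu0 with known sigma."""
    n = len(sample)
    # Step (iv): test statistic for a z-test on the mean.
    z = (mean(sample) - mu0) / (sigma / sqrt(n))
    # Step (v): two-sided critical value at significance level alpha.
    z_crit = NormalDist().inv_cdf(1.0 - alpha / 2.0)
    # Step (vi): reject H0 if the statistic falls in the critical region.
    reject = abs(z) > z_crit
    return z, z_crit, reject


# Hypothetical burning-rate measurements in cm/s (not data from the text).
sample = [61.2, 59.8, 62.5, 60.9, 63.1, 58.7, 61.8, 62.0, 60.4, 61.6]

z, z_crit, reject = two_sided_z_test(sample, mu0=60, sigma=2.5, alpha=0.05)
print(f"z = {z:.3f}, critical value = {z_crit:.3f}, reject H0: {reject}")
```

When sigma is unknown and estimated from a small sample, a t-test would normally replace the z-test in step (iv).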

Regression Analysis

In many situations, two or more variables are inherently related, and it is necessary to explore the nature of this relationship (linear or nonlinear). Regression analysis is a statistical technique for investigating the relationship between two or more variables. For example, in a chemical process, suppose that the yield of a product is related to the process operating temperature. Regression analysis can be used to build a model (response surface) to predict the yield at a given temperature level. This response surface can also be used for further process optimization, such as finding the temperature that maximizes yield, or for process control.

Consider Table 2-2, which contains paired data on the hydrocarbon level in % (the x variable) and the corresponding oxygen purity in % (the y variable) produced in a chemical distillation process. The analyst is interested in estimating and predicting the value of y for a given level of x, within the range of experimentation.

Table 2-2 Data Collected on % Hydrocarbon levels (x) and Purity % of Oxygen (y)

| Observation number | Hydrocarbon level x (%) | Purity y (%) | Observation number | Hydrocarbon level x (%) | Purity y (%) |
| --- | --- | --- | --- | --- | --- |
| 1 | 0.99 | 90.1 | 11 | 1.19 | 93.54 |
| 2 | 1.0 | 89.05 | 12 | 1.15 | 92.52 |
| 3 | 1.15 | 91.5 | 13 | 0.97 | 90.56 |
| 4 | 1.29 | 93.74 | 14 | 1.01 | 89.54 |
| 5 | 1.44 | 96.73 | 15 | 1.11 | 89.85 |
| 6 | 1.36 | 94.45 | 16 | 1.22 | 90.39 |
| 7 | 0.87 | 87.59 | 17 | 1.26 | 93.25 |
| 8 | 1.23 | 91.77 | 18 | 1.32 | 93.41 |
| 9 | 1.55 | 99.42 | 19 | 1.43 | 94.98 |
| 10 | 1.4 | 93.65 | 20 | 0.95 | 87.33 |

A scatter diagram is a first visual tool for understanding the type of relationship; regression analysis is then recommended for developing a prediction model. Inspection of the scatter diagram (given in Figure 2-16) indicates that, although no simple curve will pass exactly through all the points, there is a strong indication that the points lie scattered randomly around a straight line.

Therefore, it is probably reasonable to assume that the mean of the random variable Y is related to x by the following straight-line relationship:

E(Y | x) = β0 + β1 x

where the regression coefficients β0 and β1 are called the intercept and the slope of the line, respectively. The slope and intercept are estimated by the ordinary least squares method. While the mean of Y is a linear function of x, an actual observed value y does not fall exactly on the straight line. The appropriate way to generalize this to a probabilistic linear model is to assume that the expected value of Y is a linear function of x, but that for a particular value of x the actual value of Y is determined by the mean value from the linear regression model plus a random error term,

Y = β0 + β1 x + ε

where ε is the random error term. We call this model the simple linear regression model, because it has only one independent variable (x), or regressor. Sometimes a model like this will arise from a theoretical relationship. Many times, however, we have no theoretical knowledge of the relationship between x and y, and the choice of the model is based on inspection of a scatter diagram, as we did with the oxygen purity data. We then think of the linear regression model as an empirical model with uncertainty (error).
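To make the least squares step concrete, the following sketch estimates the intercept and slope from the Table 2-2 data using the standard closed-form formulas, slope = Sxy / Sxx and intercept = ȳ − slope · x̄. The function and variable names are illustrative, and the code is not meant to reproduce the MINITAB output exactly.

```python
# Table 2-2: hydrocarbon level x (%) and oxygen purity y (%).
x = [0.99, 1.0, 1.15, 1.29, 1.44, 1.36, 0.87, 1.23, 1.55, 1.4,
     1.19, 1.15, 0.97, 1.01, 1.11, 1.22, 1.26, 1.32, 1.43, 0.95]
y = [90.1, 89.05, 91.5, 93.74, 96.73, 94.45, 87.59, 91.77, 99.42, 93.65,
     93.54, 92.52, 90.56, 89.54, 89.85, 90.39, 93.25, 93.41, 94.98, 87.33]


def fit_simple_linear_regression(x, y):
    """Return the ordinary least squares estimates (intercept, slope)."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    # Corrected sum of cross products and corrected sum of squares of x.
    s_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    s_xx = sum((xi - x_bar) ** 2 for xi in x)
    slope = s_xy / s_xx
    intercept = y_bar - slope * x_bar
    return intercept, slope


b0, b1 = fit_simple_linear_regression(x, y)
print(f"fitted line: y = {b0:.3f} + {b1:.3f} x")
```

By construction, the fitted line passes through the point of sample means (x̄, ȳ).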

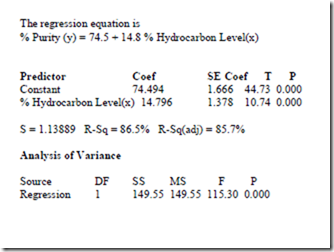

The regression option in MINITAB can be used to obtain all the results of the model. The results derived from the MINITAB regression option using the oxygen purity data set are provided below.

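We look at the R-Sq value (the coefficient of determination, the share of the variation in y explained by the model). As a rule of thumb, if it is more than 70%, we take the linear model to describe the dependence of y on x adequately. More details on the interpretation of the other values are given in the MINITAB help menu, or readers can refer to any standard statistics or quality management book.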
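For readers without MINITAB, R-Sq can be computed directly as 1 − SSE/SST, where SSE is the residual sum of squares and SST is the total sum of squares of y about its mean. The sketch below applies this to the Table 2-2 data; the function name is illustrative, and the result is not guaranteed to match the MINITAB output to the last digit.

```python
# Table 2-2: hydrocarbon level x (%) and oxygen purity y (%).
x = [0.99, 1.0, 1.15, 1.29, 1.44, 1.36, 0.87, 1.23, 1.55, 1.4,
     1.19, 1.15, 0.97, 1.01, 1.11, 1.22, 1.26, 1.32, 1.43, 0.95]
y = [90.1, 89.05, 91.5, 93.74, 96.73, 94.45, 87.59, 91.77, 99.42, 93.65,
     93.54, 92.52, 90.56, 89.54, 89.85, 90.39, 93.25, 93.41, 94.98, 87.33]


def r_squared(x, y):
    """Coefficient of determination for a least squares straight-line fit."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    s_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    s_xx = sum((xi - x_bar) ** 2 for xi in x)
    slope = s_xy / s_xx
    intercept = y_bar - slope * x_bar
    # Residual (unexplained) and total variation in y.
    ss_res = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((yi - y_bar) ** 2 for yi in y)
    return 1.0 - ss_res / ss_tot


r2 = r_squared(x, y)
print(f"R-Sq = {100 * r2:.1f}%")
```

A high R-Sq alone does not confirm that a straight line is the right model; the scatter diagram should still be inspected.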

The P-value and its interpretation are important in both hypothesis testing and regression analysis. The general interpretation is that if the P-value is less than 0.05 (for a test at the 5% level of significance), the null hypothesis is rejected. Readers may refer to the web (http://www.youtube.com/watch?v=lm_CagZXcv8; http://www.youtube.com/watch?v=TWmdzwAp88k) for more details on the P-value and its interpretation.
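As a small illustration of the P-value rule, the sketch below computes the two-sided P-value for a z statistic, assuming the statistic follows a standard normal distribution under the null hypothesis. The observed value z = 1.52 is hypothetical, and the function name is illustrative.

```python
from statistics import NormalDist


def two_sided_p_value(z):
    """Probability, under H0, of a z statistic at least as extreme as the one observed."""
    return 2.0 * (1.0 - NormalDist().cdf(abs(z)))


# Hypothetical observed test statistic (not a result from the text).
p = two_sided_p_value(1.52)
reject_h0 = p < 0.05  # decision at the 5% level of significance
print(f"P-value = {p:.4f}, reject H0: {reject_h0}")
```

Comparing the P-value with α leads to the same decision as comparing the test statistic with the critical values at the same α.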

Common Abuses of Regression

Regression is widely used and frequently misused. Care should be taken in selecting the variables with which to construct regression equations and in determining the form of the model. It is possible to develop statistical relationships among variables that are completely unrelated in a practical sense. For example, we might attempt to relate the shear strength of spot welds to the number of boxes of computer paper used by the information systems group. A straight line may even appear to provide a good fit to the data, but the relationship is an unreasonable one. A strong observed association between variables does not necessarily imply that a causal relationship exists between them. Designed experimentation is the only way to prove causal relationships.

Regression relationships are valid only for values of the regressor variable within the range of the original experimental or actual data. A relationship that holds within that range may be unlikely to remain so as we extrapolate. That is, if we use values of x beyond the range of the observations, we become less certain about the validity of the assumed model and its predictions.
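One simple safeguard against extrapolation is to check a new x value against the observed range before predicting. The sketch below uses the range of hydrocarbon levels in Table 2-2 (0.87 to 1.55); the coefficients 74.0 and 15.0 are placeholder values chosen for illustration, not the fitted results, and the function name is hypothetical.

```python
def predict_within_range(x_new, intercept, slope, x_min, x_max):
    """Return intercept + slope * x_new, refusing to extrapolate outside [x_min, x_max]."""
    if not (x_min <= x_new <= x_max):
        raise ValueError(
            f"x = {x_new} is outside the observed range [{x_min}, {x_max}]; "
            "the fitted model is not validated there"
        )
    return intercept + slope * x_new


X_MIN, X_MAX = 0.87, 1.55  # smallest and largest hydrocarbon levels in Table 2-2
B0, B1 = 74.0, 15.0        # placeholder coefficients, NOT the fitted values

print(predict_within_range(1.0, B0, B1, X_MIN, X_MAX))

try:
    predict_within_range(2.0, B0, B1, X_MIN, X_MAX)
except ValueError as err:
    print("refused:", err)
```

Raising an error is one design choice; issuing a warning and still returning the prediction would be a softer alternative.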
